Apple Photo Smart Collections can be hilarious

Apple announced a new feature in iOS 10 where your iPhone can create smart collections of your photos in order to surface them to you whenever you want. They’ll show you vacation collections, best of’s, certain dates that seem memorable. They call this feature ‘Memories’.

The collections around locations and people are quite good! The logic here is fairly simple: if you spend a short amount of time in a location, or take a lot of pictures there, group them together. The face and object recognition are pretty good as well, and let you easily see a lot of pictures of anyone in your photo collection. I’m quite impressed with both.
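To make that grouping idea concrete, here is a minimal sketch of how I imagine it could work. This is my guess, not Apple’s implementation: the `Photo` fields, the `group_by_place_and_time` name, and both thresholds are made up for illustration, and the distance check is a crude comparison of raw latitude/longitude degrees.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from math import hypot


@dataclass
class Photo:
    name: str
    taken_at: datetime
    lat: float
    lon: float


def group_by_place_and_time(photos, max_gap=timedelta(hours=12), max_degrees=0.5):
    """Split photos into runs that stay close together in time and place.

    Both thresholds are arbitrary assumptions, and the distance is a rough
    difference in degrees rather than a real geographic distance.
    """
    groups = []
    for photo in sorted(photos, key=lambda p: p.taken_at):
        if groups:
            last = groups[-1][-1]
            close_in_time = photo.taken_at - last.taken_at <= max_gap
            close_in_space = hypot(photo.lat - last.lat, photo.lon - last.lon) <= max_degrees
            if close_in_time and close_in_space:
                # Same trip or outing: keep adding to the current group.
                groups[-1].append(photo)
                continue
        # Too far away or too much later: start a new group.
        groups.append([photo])
    return groups
```

A real version would presumably also look at how many photos were taken and how unusual the location is for you, but the basic shape is just "sort by time, cut where the gap gets big."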

Where it breaks down is Apple’s ‘Best Of <X>’ collections. X can be last week, last 3 months, last year, and perhaps more options I haven’t seen yet. The big question is how Apple selects for ‘Best’, which is a rather subjective and personal call. It seems to me they base it on how many times you’ve shared a photo in various ways (iMessage, AirDrop, email, perhaps even when it’s selected from a 3rd party photo picker). This metric makes sense: the photos you send out the most are presumably the ones you most wanted seen. It should select for photos you want shown and reduce the chance that photos you regret taking, or want to keep private, show up in these collections.

It also lets you press one button to make a movie from a small sample of these photos. I think it picks them, again, based on which photos were shared the most. Which is great for most people! For me… not so much.

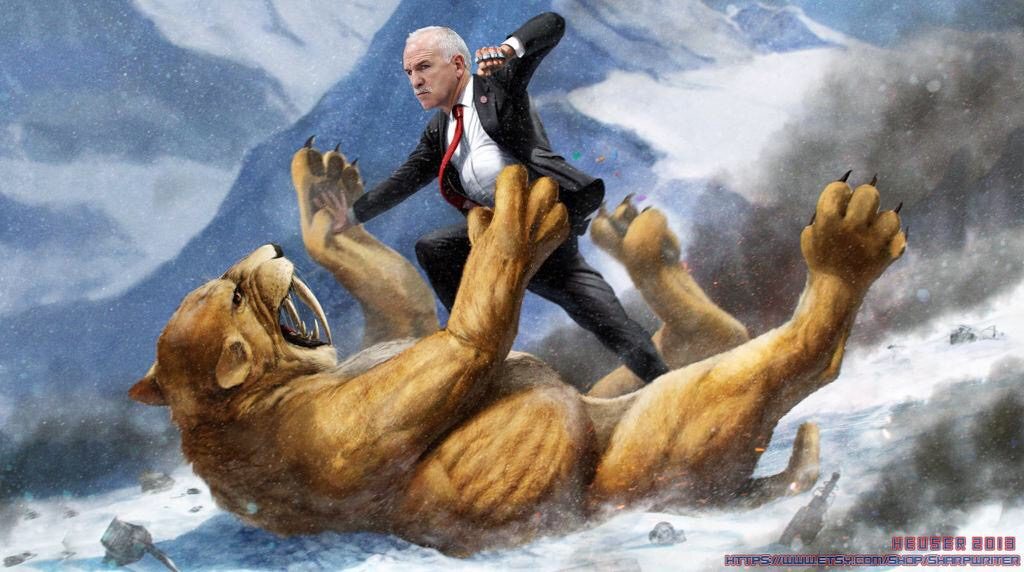

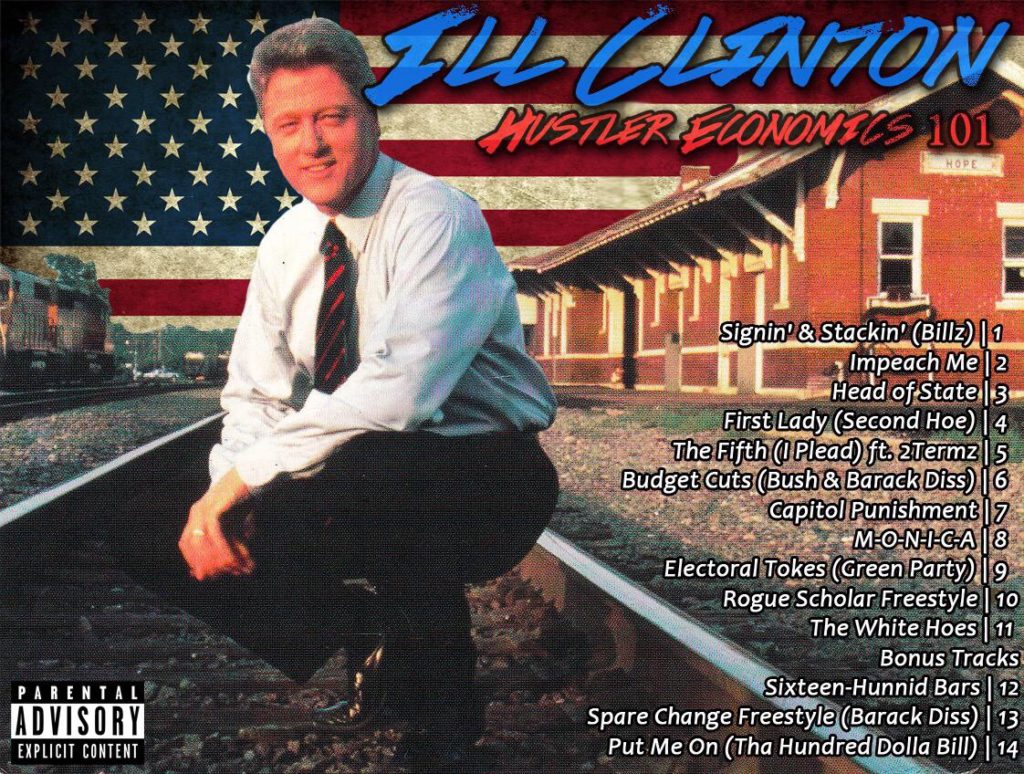

I don’t use photos the way I imagine Apple thinks most people do. I do take a lot of vacation photos, and a couple out at a bar or a restaurant, but I usually get those printed or make a photo book. I don’t email every one of my vacation photos to my family, just a couple while we’re there so they can see what it’s like. I also take photos when I sell my furniture on Craigslist (and don’t delete them), which I usually just AirDrop to my laptop to upload. I took a lot of pictures when house hunting and sent them to various family members to show what we were seeing and get opinions. I save memes to my camera roll to share with multiple sets of people. I save GIFs to post in various places. I take pictures of silly things that look ridiculous and send them off to various people. And the hockey community comes up with some of the best images for taunting friends who root for other teams.

So when I go into one of these collections (Best of 2015 is my favorite) and just hit the play movie button, it’s not the myriad of vacation photos or pictures out with friends that show up. Some of them do, but the video can only fit around 10 or 15 photos, so it’s going to choose the ones I sent to everyone! I get the following (mixed in with one or two vacation photos, etc.) set to dramatic music:

One picture from my wife’s med school graduation, but plenty of craigslist photos, memes and other crap.

Based on how it selected these photos, I’m fairly confident Apple is relying on a metric weighted by the number of shares per photo. That matters most for the generated movies, since that’s the feature you’re most likely to simply hit play on and watch with other people, rather than going through first to make sure the pictures are in fact what you want. They must also have been tracking shares for a while in preparation for this feature, as most of these are older pictures.
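If my guess is right, the selection could be as simple as the sketch below. To be clear, this is purely my assumption about the behavior: `Photo`, `share_count`, and `pick_for_movie` are hypothetical names, and the share counts are invented for the example, not read from my phone.

```python
from dataclasses import dataclass


@dataclass
class Photo:
    name: str
    share_count: int  # hypothetical: how many times the photo was sent anywhere


def pick_for_movie(photos, limit=10):
    """Return the names of the most-shared photos, most shared first."""
    ranked = sorted(photos, key=lambda p: p.share_count, reverse=True)
    return [p.name for p in ranked[:limit]]


# Invented numbers, roughly in the spirit of my camera roll.
camera_roll = [
    Photo("vacation-beach", 1),
    Photo("graduation", 3),
    Photo("craigslist-couch", 4),
    Photo("hockey-meme", 9),
    Photo("reaction-gif", 7),
]

print(pick_for_movie(camera_roll, limit=3))
# ['hockey-meme', 'reaction-gif', 'craigslist-couch']
```

With a ranking like this, anything I blast out to lots of people wins, and the photos I actually care about but only share once or twice never make the cut.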

Also, by preparing this blog post I’m pretty sure I weighted those metrics even more by AirDropping all these photos to my laptop :).